Since the spread of COVID-19 was first reported, researchers of all types have mobilized to meet the challenges that its causative agent, SARS-CoV-2, presents to the world. Data scientists in particular have been quick to apply their expertise to a range of problems: identifying, tracking and predicting outbreaks; diagnosing COVID-19; identifying infected individuals and detecting non-compliance with virus countermeasures; discovering new therapeutic interventions or repurposing existing ones; and searching for a safe, effective vaccine.

We’re learning more about SARS-CoV-2 and COVID-19 every day, and researchers are becoming more sophisticated in their exploration of everything from the virus’s basic biology to ways of improving patient outcomes. Almost every question they ask requires information to be found in multiple sources and then integrated, and almost every answer prompts another question that requires information from yet another source.

The process of assembling data and information to answer significant research questions usually begins with researchers asking:

- What data are available? Do the required data exist at all?
- How can the data be accessed once we find them? What rights do we have to use the data?
- Are the data and metadata understandable? Can we put them all together in a meaningful way?
- Are all the data valid, or are there outliers or duplicates to eliminate?

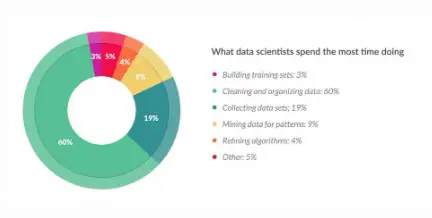

This iterative process of finding information wherever it resides, bringing it together, cleaning it up and organizing it can consume much of a data scientist’s time: as much as 80%, according to a 2016 survey reported by Forbes (https://www.forbes.com/sites/gilpress/2016/03/23/data-preparation-most-time-consuming-least-enjoyable-data-science-task-survey-says/). Only the remaining 20% goes to the more productive work of actually analyzing the data or training predictive models. The diagram above depicts a typical data science workflow on a timeline.

Putting data together to enable the analysis and modeling that lead to insight is usually slow and tedious because most of the data available to researchers today are not FAIR: the data and metadata typically do not adhere to the FAIR Guiding Principles of Findability, Accessibility, Interoperability and Reusability. Data that follow the FAIR Principles are easier to reuse, so they can be applied efficiently to any purpose, even unanticipated ones, which shortens time-to-insight and increases the inherent value of the data. The effort spent FAIRifying data therefore pays off in quicker results across a broader range of applications.

At Elsevier, we’re committed to helping scientists and clinicians find new answers, reshape human knowledge and tackle the most urgent human crises, and we believe that data and information are the keys to success. We are proud to support efforts that help researchers use the FAIR Principles to become the best data stewards they can be. We are particularly proud to have participated in the development of the Pistoia Alliance’s FAIR Toolkit, which is freely available to all data stewards, laboratory scientists, business analysts and science managers.

The FAIR Toolkit contains use cases that help life sciences researchers better understand FAIR data and how to FAIRify their own data. It also provides access to FAIR tools and training, along with guidance to help organizations manage the change that adherence to the FAIR Principles requires. Like many of the organizations we serve, we at Elsevier, and within the Entellect team, have made a commitment to FAIR data, and we encourage researchers to explore the Pistoia Alliance’s FAIR Toolkit to learn more.